Frontlines.io | Where B2B Founders Talk GTM.

Actionable Takeaways

Launch with the minimum feature set that proves your core hypothesis:

Pavelle shipped Doctronic in September 2023 without user accounts—chats disappeared when closed unless users saved them manually. Within days, user requests for persistent chat history validated demand. The insight: your MVP should test one assumption, not solve every user need. If you're hesitating to launch because features are missing, ask whether those features are actually required to validate your hypothesis or just things you assume users want.

Use specificity to unlock early adoption in skeptical markets:

Rather than targeting "healthcare" broadly, Pavelle posted in Facebook groups for specific chronic conditions, offering a free AI backed by clinical guidelines. Half the groups banned them for commercial activity, but the other half engaged immediately. The lesson: in regulated or skeptical markets, narrow targeting with explicit safety mechanisms (clinical guidelines, physician co-founder credibility) converts better than broad positioning. Identify where your skeptics congregate and address their specific objections upfront.

Design system architecture to prevent failure modes, not just tune models:

Doctronic's safety architecture separates AI decision-making from prescription execution. The LLM asks questions and determines renewal safety, but deterministic code outside the AI verifies the prescription exists, checks dosage accuracy, and confirms the schedule. Even if adversarial prompting compromises the LLM, the deterministic layer prevents bad outcomes. Founders building high-stakes AI products should architect multiple independent verification layers rather than relying on prompt engineering or temperature tuning alone.

Target regulatory pain points with quantified deaths and costs:

Pavelle approached Utah with specific numbers: 125,000 preventable deaths annually from medication non-adherence, 30-40% caused by renewal friction, and a $100B economic burden. These statistics—combined with Utah's rural population and physician shortage—made the problem impossible to ignore. When approaching regulators, lead with mortality and cost data that make inaction untenable, not just efficiency gains or convenience improvements.

Regulatory sandboxes require proof of safety methodology, not just technology demos:

Utah's AI Learning Lab didn't just grant Doctronic permission—they required a three-phase oversight structure where human physicians review 100% of initial prescriptions in each medication class, then 10%, then ongoing spot checks. Pavelle also secured AI malpractice insurance through Lloyd's Market before launch. The insight: regulatory innovation offices want risk mitigation frameworks, not promises. Build and fund your oversight methodology before approaching regulators, and treat insurance underwriting as a third-party validation of your safety claims.

Publish clinical validation studies before scaling—they become your regulatory and sales asset:

The study showing 99.2% agreement between Doctronic's AI and human physicians across 500 patient encounters became the foundation for regulatory conversations and public trust. Founders in regulated spaces should budget for formal validation studies early—these aren't marketing expenses, they're the permission structure for everything that follows. Work backward from what regulators and enterprise buyers need to see, then design studies that generate that specific evidence.

Conversation Highlights

Doctronic: How to Become the First AI Licensed to Practice Medicine by Targeting Regulatory Pain Points

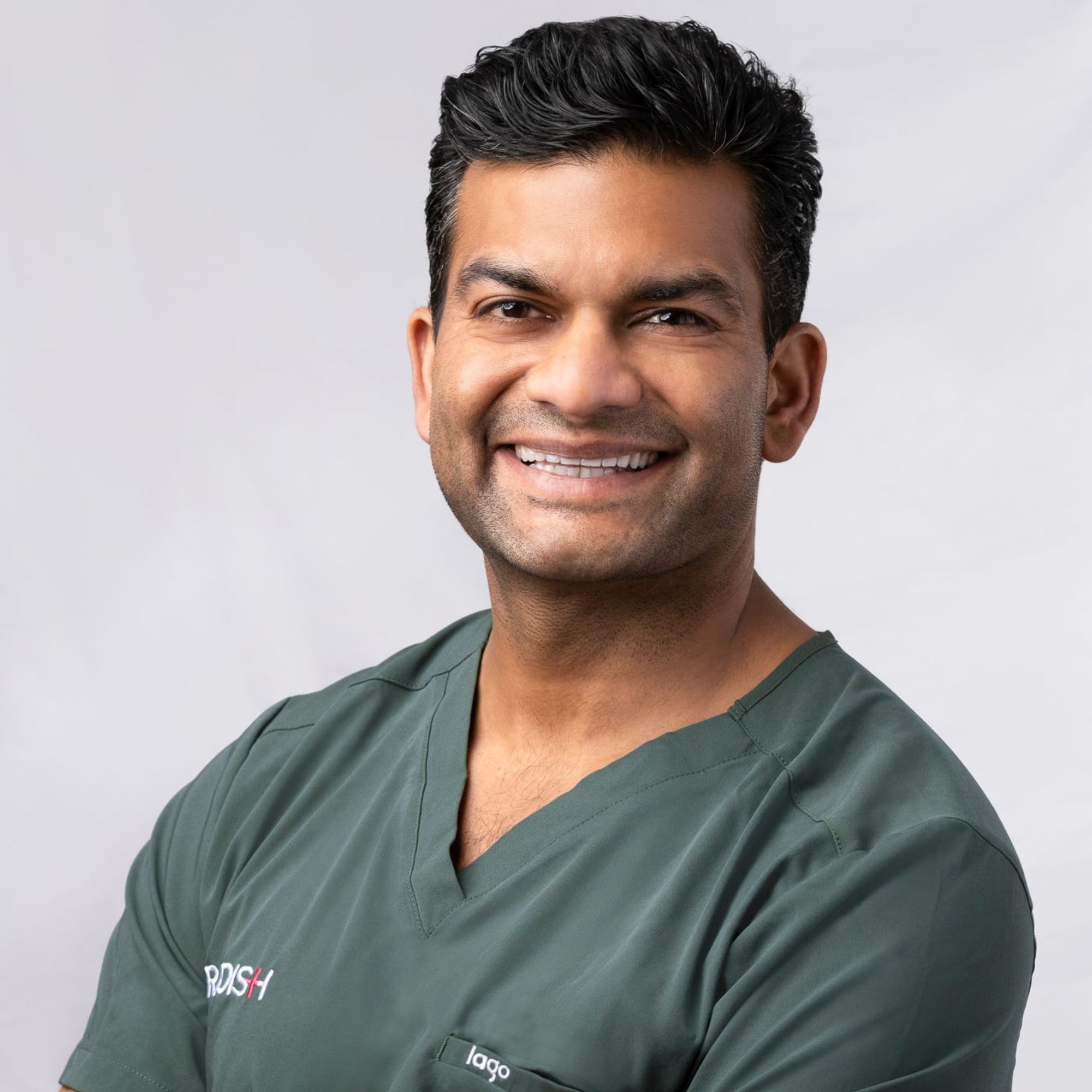

Matt Pavelle’s friends stopped calling him after he got married. They started calling his wife instead—she’s a doctor, and they all had medical questions.

Every single one of them worked at Meta, Apple, Google, or Microsoft. They had excellent health insurance. Yet none could get medical answers quickly enough to matter.

This pattern repeated for a decade before Matt could do anything about it. Then GPT-3.5 launched in 2022, and he saw a path forward.

The Technical Challenge: Making GPT-3.5 Clinically Accurate

In a recent episode of BUILDERS, Matt explained the core problem with early LLMs for medical applications: “It was wildly inaccurate at the time and you had small context windows, it just didn’t work as a product. It wasn’t clinically accurate.”

The solution wasn’t better prompts or temperature tuning; it was architectural. Matt and his co-founder (a physician) started a library of clinical guidelines, written by their doctors, that would grow into the tens of thousands. They fed these into the AI using RAG and other grounding technologies.

The system combined multiple models requiring consensus before generating responses. But the critical insight was separating decision-making from execution. “The LLM makes the determination whether it’s safe for you to get the renewal, but that’s not the code that writes the renewal,” Matt explains. “It’s deterministic code outside of the AI, where it actually looks up and verifies that you do have that prescription and that is the right dosage, it is the right schedule.”

This architecture means even successful adversarial attacks on the LLM cannot result in incorrect prescriptions. The verification layer operates independently.
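To make the separation concrete, here is a minimal Python sketch of a deterministic gate that sits outside the model. It is an illustration under stated assumptions, not Doctronic's actual code: the `Prescription` and `RenewalRequest` schemas, the `verify_renewal` function, and the in-memory `records` lookup are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prescription:
    """A prescription already on record (hypothetical schema)."""
    patient_id: str
    drug: str
    dosage_mg: int
    doses_per_day: int


@dataclass(frozen=True)
class RenewalRequest:
    """What the LLM layer proposes after its safety questions (hypothetical)."""
    patient_id: str
    drug: str
    dosage_mg: int
    doses_per_day: int
    llm_says_safe: bool


def verify_renewal(request, records):
    """Deterministic gate: approve only an exact match with the record."""
    if not request.llm_says_safe:
        return False, "LLM judged the renewal unsafe"
    on_file = records.get((request.patient_id, request.drug))
    if on_file is None:
        return False, "no matching prescription on record"
    if request.dosage_mg != on_file.dosage_mg:
        return False, "dosage does not match the record"
    if request.doses_per_day != on_file.doses_per_day:
        return False, "schedule does not match the record"
    return True, "verified against the record"


# A compromised LLM asks for four times the recorded dose; the gate refuses.
records = {("p1", "lisinopril"): Prescription("p1", "lisinopril", 10, 1)}
tampered = RenewalRequest("p1", "lisinopril", 40, 1, llm_says_safe=True)
print(verify_renewal(tampered, records))
```

The design point is that the model's output is treated as an untrusted proposal: nothing it says can change what the record lookup returns, so a successful prompt injection can at most produce a request that the gate rejects.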

Launch Strategy: Facebook Groups and Disappeared Chats

Matt launched Doctronic in September 2023 without user accounts. Conversations disappeared when users closed them. No persistence, no save functionality, nothing.

The launch channels: minimal Google Ads spend and posts in Facebook groups for chronic conditions. “I think half of the Facebook groups immediately banned us,” Matt recalls. “They said, oh, no commercial activity.”

The other half engaged immediately. Within days, users requested the ability to save conversations. That single feature request validated the entire product hypothesis.

Many founders would have delayed launch to build account infrastructure. Matt proved you don’t need it to validate demand; you just need the core value proposition working.

The Clinical Study as GTM Asset

By July 2024, Doctronic published a study examining 500 patients. Each patient talked to the AI, then saw one of Doctronic’s human physicians (the company operates a full primary care practice licensed in all 50 states).

The results: 99.2% agreement on treatment plans. Zero hallucinations.

This wasn’t a marketing exercise—it became the regulatory currency for every conversation that followed. When approaching Utah’s AI Learning Lab, Matt had third-party validated proof of clinical accuracy. The study did more to enable their business model than any amount of sales collateral could have.

Finding the Regulatory Wedge: 125,000 Deaths Annually

Matt identified medication non-adherence as the entry point. The numbers were impossible to ignore: 125,000 preventable deaths every year, $100 billion in economic costs, with 30-40% of the problem caused purely by prescription renewal friction.

“80% of prescriptions that are written are for people that have chronic conditions,” Matt explains. “Those prescriptions have to be renewed. And when they are not renewed, you have medication non-adherence.”

The regulatory logic was airtight: people with chronic conditions need their medications. Real doctors have already prescribed them. The prescriptions need administrative renewal. No doctor wants to do this work: “oftentimes they can’t charge for that. It’s just an administrative task to them.”

This created the perfect conditions for regulatory innovation: clear mortality data, economic burden, administrative waste, and physician burnout all pointing to the same problem.

Navigating Utah’s AI Learning Lab

Matt approached Utah’s AI Learning Lab, a regulatory sandbox within the Department of Commerce designed to allow AI companies to test theories by temporarily mitigating certain laws.

The pitch was direct: “We can safely solve this or we believe we can safely solve this. Let us prove this to you. And we’ll only charge $4 for the renewal fee.”

Utah’s requirements weren’t ceremonial. The three-phase oversight structure required human physician review of 100% of prescriptions for the first 250 in each medication classification, then 10% review, then spot checks at an undefined percentage.

This methodology wasn’t negotiable—it was the price of admission. Matt built it into the product architecture before approaching regulators.
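One way such a phased review rule could be encoded is sketched below in Python. Only the first-250 threshold and the 10% rate come from the description above. The length of the 10% phase and the spot-check rate are not stated, so `SAMPLED_COUNT` and `SPOT_CHECK_RATE` are placeholder assumptions, and the function names are hypothetical.

```python
import random

FULL_REVIEW_COUNT = 250  # stated: first 250 per medication classification
SAMPLED_RATE = 0.10      # stated: then 10% review
SAMPLED_COUNT = 1000     # ASSUMPTION: length of the 10% phase is not given
SPOT_CHECK_RATE = 0.02   # ASSUMPTION: the spot-check percentage is undefined


def review_probability(prior_count):
    """Chance that the next prescription in a class gets physician review.

    prior_count is how many prescriptions in this medication class
    have already been issued.
    """
    if prior_count < FULL_REVIEW_COUNT:
        return 1.0
    if prior_count < FULL_REVIEW_COUNT + SAMPLED_COUNT:
        return SAMPLED_RATE
    return SPOT_CHECK_RATE


def needs_review(prior_count, rng):
    """Decide whether to route this prescription to a human physician."""
    return rng.random() < review_probability(prior_count)
```

Because the count is tracked per medication classification, each new class would start again at 100% review regardless of how many prescriptions other classes have already cleared.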

Securing AI Malpractice Insurance Through Lloyd’s Market

Before Utah would approve the program, Matt needed AI malpractice insurance. This had never been done before.

“You have to go to the Lloyd’s Market because that’s the only place in the world where you can get anything insured. Someone there will do it,” Matt says. The process took time, but it provided third-party validation of Doctronic’s safety architecture.

Insurance underwriters are professional risk assessors. Getting them to write a policy meant convincing sophisticated actuaries that the system architecture, oversight methodology, and clinical validation made the risk acceptable.

December 15, 2025: Soft Launch

Doctronic launched prescription renewals in Utah on December 15, 2025, initially to “friendlies and friends of friendlies” to test the system. The public announcement came this week.

“This is the first time that an AI company is licensed to practice medicine,” Matt confirms. Technically, the company holds the license through Utah’s regulatory sandbox, where laws requiring only licensed physicians to practice medicine have been temporarily mitigated for this specific use case.

Other states have already reached out. Arizona and Texas both have AI regulatory sandboxes. International governments are exploring similar programs.

The Expansion Playbook

Matt’s next targets are clear: states with existing AI regulatory sandboxes and use cases beyond prescription renewals. “There are probably some other safe things that we can do with AI outside of just prescription renewals,” he notes. “For example, there are probably some use cases for some relatively safe other prescriptions, like antibiotics.”

Lab and imaging orders represent another category where AI could safely reduce physician burden.

But the core strategy remains the same: identify administrative tasks that cause measurable harm when not completed, prove AI can safely handle them with physician oversight, and approach regulatory sandboxes with mortality data and structured oversight methodologies.

What B2B Founders Can Learn

Doctronic’s path to becoming the first licensed AI doctor offers a tactical framework for navigating regulated markets:

Lead regulators with mortality and cost data that make inaction untenable. Matt didn’t pitch efficiency—he pitched 125,000 preventable deaths.

Publish clinical validation before approaching regulators. The 99.2% agreement study became the foundation for every regulatory conversation.

Design safety into system architecture, not prompts. Separating AI decision-making from deterministic verification prevents entire classes of failures.

Secure third-party validation through insurance underwriting. Getting Lloyd’s Market to write a policy proved risk mitigation to regulators.

Target regulatory sandboxes explicitly designed for innovation. Utah’s AI Learning Lab exists to test new models—Matt used it exactly as intended.

Build oversight methodologies before asking permission. The three-phase review structure wasn’t a concession—it was part of the initial proposal.

Matt’s journey from observing a decade-old problem to building the first AI licensed to practice medicine demonstrates that “impossible” regulatory barriers often have structured paths forward, if you’re willing to build the safety architecture, generate the clinical evidence, and find the regulatory partners designed to enable innovation.

The lesson for B2B founders in regulated industries: don’t assume traditional paths are your only option. Regulatory sandboxes exist. Use them.